OutOfMemoryError: CUDA Out of Memory

The CUDA out of memory error is a common hurdle when training large models or handling large datasets. It occurs when your GPU runs out of memory while trying to allocate memory for your model. However, strategies such as reducing the batch size or using gradient… can help you get past it. In this blog post, we will explore some common causes of this error and how to fix them.

A frequently asked variant is: "Why do I get CUDA out of memory when running a PyTorch model [with enough GPU memory]?" As one answer puts it, while training large deep learning models with little GPU memory, you can mainly use two ways (apart from the ones discussed in other answers) to avoid CUDA out-of-memory errors. Another user reports: "I had this same error: RuntimeError: …"
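
To see why the model itself can exhaust the GPU before a single batch is processed, a back-of-the-envelope estimate helps. The sketch below assumes plain FP32 training with the Adam optimizer, which keeps two moment buffers per parameter, so each parameter costs about 16 bytes before any activations are counted. The function names and the 1-billion-parameter model are illustrative.

```python
# Rough lower bound on GPU memory needed just to hold a model during
# plain FP32 training with Adam. Assumptions: 4 bytes per FP32 value and
# two extra FP32 buffers per parameter (Adam's first and second moments).
# Activations, the CUDA context, and allocator overhead are NOT included.

BYTES_PER_FP32 = 4


def training_state_bytes(num_params: int) -> int:
    """Weights + gradients + Adam's two moment buffers, all in FP32."""
    weights = num_params * BYTES_PER_FP32
    gradients = num_params * BYTES_PER_FP32
    adam_moments = 2 * num_params * BYTES_PER_FP32
    return weights + gradients + adam_moments


def to_gib(num_bytes: int) -> float:
    """Convert bytes to GiB (2**30 bytes)."""
    return num_bytes / 2**30


# Hypothetical 1-billion-parameter model.
print(f"{to_gib(training_state_bytes(1_000_000_000)):.1f} GiB")  # 14.9 GiB
```

Batch size only affects the activation memory on top of this figure, so if the estimate alone approaches your GPU's capacity, shrinking the batch will not be enough.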


Understanding CUDA Out of Memory: Causes, Solutions, and Best Practices
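
Reducing the batch size is the most direct fix. The sketch below automates it by retrying a caller-supplied training step and halving the batch size each time the step fails with an out-of-memory error. `run_with_backoff`, `is_oom`, and `release_cached_memory` are hypothetical helpers, recognizing an OOM by its message text is a heuristic, and the only PyTorch calls assumed are `torch.cuda.is_available()` and `torch.cuda.empty_cache()`.

```python
import gc
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def is_oom(exc: BaseException) -> bool:
    """Heuristic: PyTorch's CUDA OOM errors are RuntimeErrors (newer releases
    raise the subclass torch.cuda.OutOfMemoryError) whose message contains
    "out of memory"."""
    return isinstance(exc, RuntimeError) and "out of memory" in str(exc).lower()


def release_cached_memory() -> None:
    """Collect garbage and, if PyTorch with CUDA is present, return cached blocks."""
    gc.collect()
    try:
        import torch
    except ImportError:
        return
    if torch.cuda.is_available():
        torch.cuda.empty_cache()


def run_with_backoff(
    step: Callable[[int], T], batch_size: int, min_batch_size: int = 1
) -> Tuple[T, int]:
    """Call step(batch_size); on OOM, halve the batch size and retry."""
    while batch_size >= min_batch_size:
        try:
            return step(batch_size), batch_size
        except RuntimeError as exc:
            if not is_oom(exc):
                raise  # unrelated errors should not be swallowed
        # Clean up outside the except block, after the exception (and any
        # tensors its traceback references) has been released.
        release_cached_memory()
        batch_size //= 2
    raise RuntimeError(f"out of memory even at batch size {min_batch_size}")
```

A smaller batch also changes training dynamics (for example the effective learning rate and batch-norm statistics), so treat the batch size found this way as a starting point rather than a drop-in replacement.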

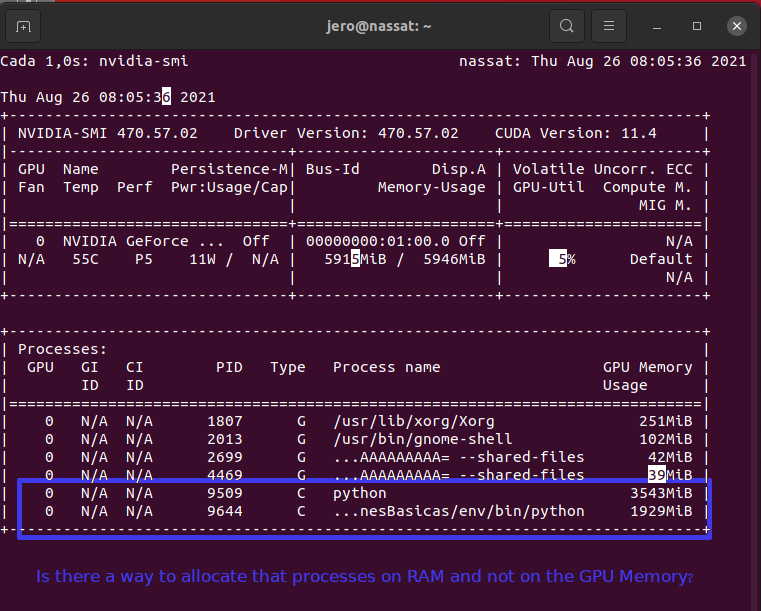

How to fix RuntimeError: CUDA out of memory - PyTorch Forums
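
One widely used gradient technique for working within a small memory budget is gradient accumulation: run several small forward/backward passes, let PyTorch sum the gradients in each parameter's `.grad`, and step the optimizer only once per group. A minimal sketch, assuming a hypothetical toy model and random data, that keeps only `micro_batch` samples on the device at a time while behaving like a batch of `micro_batch * accum_steps`:

```python
import torch
from torch import nn

# Hypothetical toy model and data; replace with your own.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
torch.manual_seed(0)
model = nn.Linear(16, 1).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.MSELoss()

accum_steps = 4  # effective batch = micro_batch * accum_steps
micro_batch = 8  # what actually has to fit on the GPU at once

inputs = torch.randn(micro_batch * accum_steps * 3, 16)
targets = torch.randn(micro_batch * accum_steps * 3, 1)

optimizer.zero_grad(set_to_none=True)
updates = 0
for step, start in enumerate(range(0, len(inputs), micro_batch)):
    x = inputs[start:start + micro_batch].to(device)
    y = targets[start:start + micro_batch].to(device)
    # Divide by accum_steps so the summed gradients equal the gradient of
    # the mean loss over one large batch of micro_batch * accum_steps samples.
    loss = loss_fn(model(x), y) / accum_steps
    loss.backward()  # gradients accumulate in .grad across micro-batches
    if (step + 1) % accum_steps == 0:
        optimizer.step()
        optimizer.zero_grad(set_to_none=True)
        updates += 1

print(f"optimizer updates: {updates}")  # 3
```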

python - CUDA out of memory - Dreambooth - Stack Overflow
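
Mixed-precision training is another general memory saver: inside `torch.autocast`, many operations run in 16-bit floats, which roughly halves the memory for their activations, while the weights stay in FP32. `GradScaler` scales the loss so small FP16 gradients do not underflow. The sketch assumes a hypothetical toy model and random data, and falls back to bfloat16 autocast with the scaler disabled when no GPU is present.

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
use_cuda = device.type == "cuda"
# FP16 autocast on CUDA; bfloat16 is the usual CPU autocast dtype.
amp_dtype = torch.float16 if use_cuda else torch.bfloat16

model = nn.Sequential(nn.Linear(64, 256), nn.ReLU(), nn.Linear(256, 10)).to(device)
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)
# When disabled, GradScaler's scale/step/update reduce to plain backward/step.
scaler = torch.cuda.amp.GradScaler(enabled=use_cuda)

x = torch.randn(32, 64, device=device)
y = torch.randint(0, 10, (32,), device=device)

optimizer.zero_grad(set_to_none=True)
with torch.autocast(device_type=device.type, dtype=amp_dtype):
    logits = model(x)  # linear layers run in reduced precision here
    loss = nn.functional.cross_entropy(logits, y)
scaler.scale(loss).backward()  # backward outside the autocast block
scaler.step(optimizer)
scaler.update()
```

Recent PyTorch releases also spell the scaler `torch.amp.GradScaler` and mark the `torch.cuda.amp` name as deprecated; the older spelling is used here for compatibility.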

CUDA out of memory - Part 1 (2019) - fast.ai Course Forums
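
Gradient checkpointing trades compute for memory: instead of storing every intermediate activation for the backward pass, it keeps only each block's input and recomputes the rest during backward. A minimal sketch using `torch.utils.checkpoint.checkpoint` on a hypothetical deep MLP (`CheckpointedMLP` is an illustrative name):

```python
import torch
from torch import nn
from torch.utils.checkpoint import checkpoint


class CheckpointedMLP(nn.Module):
    """Hypothetical deep MLP whose blocks are recomputed during backward."""

    def __init__(self, width: int = 128, depth: int = 8, use_checkpoint: bool = True):
        super().__init__()
        self.blocks = nn.ModuleList(
            nn.Sequential(nn.Linear(width, width), nn.ReLU()) for _ in range(depth)
        )
        self.use_checkpoint = use_checkpoint

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        for block in self.blocks:
            if self.use_checkpoint and self.training:
                # Activations inside `block` are discarded after the forward
                # pass and recomputed when gradients are needed.
                x = checkpoint(block, x, use_reentrant=False)
            else:
                x = block(x)
        return x
```

Gradients are identical to the non-checkpointed model; the cost is roughly one extra forward pass per checkpointed block.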

CUDA out of memory occurs while I have enough CUDA memory - PyTorch Forums
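
When the error appears although the card seems to have enough memory, compare what PyTorch's caching allocator holds with what the device reports as free. Other processes, the CUDA context, and memory that PyTorch has reserved but cannot reuse for a request of the needed size (fragmentation) all shrink what is actually available. The helper name below is hypothetical; it relies on `torch.cuda.mem_get_info`, `torch.cuda.memory_allocated`, and `torch.cuda.memory_reserved`.

```python
from typing import Dict, Optional

import torch

MiB = 2**20


def cuda_memory_report(device: int = 0) -> Optional[Dict[str, float]]:
    """Return memory figures in MiB for one GPU, or None when CUDA is unavailable."""
    if not torch.cuda.is_available():
        return None
    free, total = torch.cuda.mem_get_info(device)    # driver view, all processes
    allocated = torch.cuda.memory_allocated(device)  # held by live tensors
    reserved = torch.cuda.memory_reserved(device)    # held by PyTorch's caching allocator
    return {
        "total": total / MiB,
        "free_on_device": free / MiB,
        "allocated_by_tensors": allocated / MiB,
        "reserved_by_pytorch": reserved / MiB,
        # Cached but unused; a large value hints at fragmentation.
        "reserved_but_unallocated": (reserved - allocated) / MiB,
        # Approximate: other processes plus this process's CUDA context.
        "used_outside_pytorch_cache": (total - free - reserved) / MiB,
    }
```

If `reserved_but_unallocated` is large, PyTorch's own OOM message points to the `PYTORCH_CUDA_ALLOC_CONF` allocator settings; `torch.cuda.memory_summary()` gives a more detailed breakdown.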

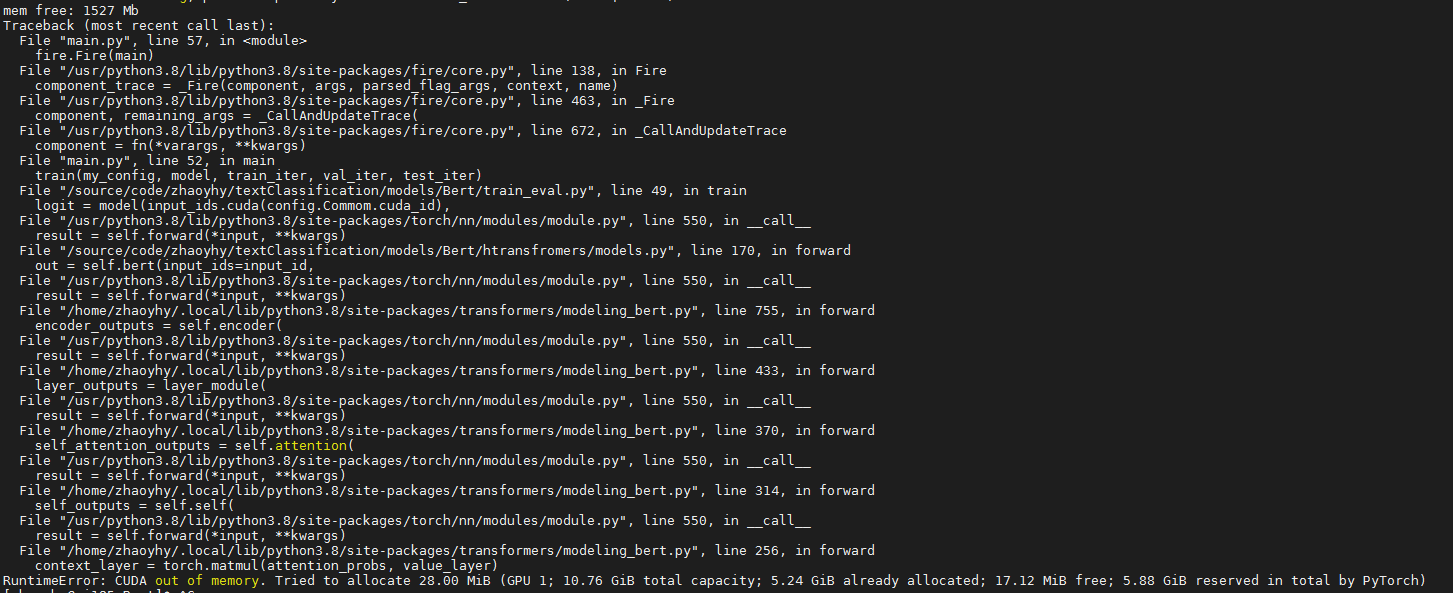

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 256…
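
The "Tried to allocate …" figure is the size of the single request that failed, not the model's total footprint, so a small number there means the GPU was already nearly full. A hypothetical parser for that figure; the exact message format varies between PyTorch versions, so the regular expression is an assumption.

```python
import re
from typing import Optional

_UNITS = {"B": 1, "KiB": 2**10, "MiB": 2**20, "GiB": 2**30, "TiB": 2**40}
_TRIED = re.compile(r"Tried to allocate (\d+(?:\.\d+)?) (B|KiB|MiB|GiB|TiB)")


def requested_bytes(message: str) -> Optional[int]:
    """Extract the failed request size (in bytes) from an OOM message, if present."""
    match = _TRIED.search(message)
    if match is None:
        return None
    value, unit = match.groups()
    return int(float(value) * _UNITS[unit])


# Illustrative message fragment, not a real log.
print(requested_bytes("CUDA out of memory. Tried to allocate 256.00 MiB"))  # 268435456
```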

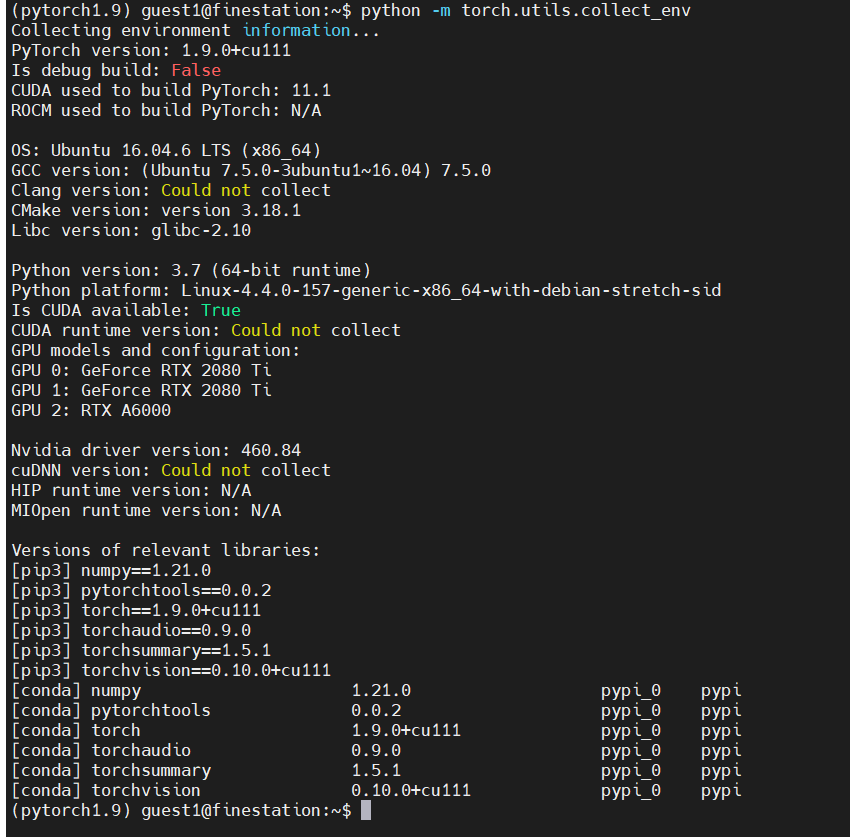

[Solved] RuntimeError: CUDA error: out of memory - ProgrammerAH
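
Two frequent causes of memory that grows until the GPU runs out are accumulating a loss tensor across iterations (so it keeps carrying autograd history) and running validation with autograd enabled. A minimal sketch of both fixes, with a hypothetical model and random data:

```python
import torch
from torch import nn

torch.manual_seed(0)
model = nn.Linear(10, 1)  # hypothetical model
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
loss_fn = nn.MSELoss()
batches = [(torch.randn(4, 10), torch.randn(4, 1)) for _ in range(5)]

model.train()
running_loss = 0.0
for x, y in batches:
    optimizer.zero_grad(set_to_none=True)
    loss = loss_fn(model(x), y)
    loss.backward()
    optimizer.step()
    # `running_loss += loss` would turn running_loss into a tensor that
    # carries autograd history across iterations; `.item()` copies the
    # value into a plain Python float instead.
    running_loss += loss.item()

model.eval()
with torch.no_grad():  # no autograd bookkeeping, so activations are not kept
    val_loss = sum(loss_fn(model(x), y).item() for x, y in batches) / len(batches)

print(f"train {running_loss / len(batches):.4f}, val {val_loss:.4f}")
```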

CUDA out of memory error when allocating one number to GPU memory
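
If even a tiny allocation fails, the tensor size is not the problem. The first CUDA call in a process creates a CUDA context, which itself needs device memory, so a GPU already filled by another process can fail immediately. A reasonable first step is to check free memory per GPU and point the process at a less busy one. The sketch shells out to `nvidia-smi` (its `--query-gpu` and `--format` options) so that checking does not create a CUDA context; `parse_free_memory` and `freest_gpu` are hypothetical helpers.

```python
import os
import shutil
import subprocess
from typing import List, Optional, Tuple


def parse_free_memory(csv_text: str) -> List[Tuple[int, int]]:
    """Parse nvidia-smi 'index, memory.free' rows (MiB, no header) into tuples."""
    rows = []
    for line in csv_text.strip().splitlines():
        index, free_mib = (field.strip() for field in line.split(","))
        rows.append((int(index), int(free_mib)))
    return rows


def freest_gpu() -> Optional[int]:
    """Return the nvidia-smi index of the GPU with the most free memory, or None."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,memory.free", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    rows = parse_free_memory(result.stdout)
    return max(rows, key=lambda row: row[1])[0] if rows else None


if __name__ == "__main__":
    gpu = freest_gpu()
    if gpu is not None:
        # nvidia-smi numbers GPUs in PCI bus order; make CUDA use the same order.
        os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
        # Must be set before CUDA is initialised, e.g. before importing torch.
        os.environ["CUDA_VISIBLE_DEVICES"] = str(gpu)
        print(f"using GPU {gpu}")
```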

![[Solved] RuntimeError CUDA error out of memory ProgrammerAH](https://programmerah.com/wp-content/uploads/2021/09/20210926150458602-624x557.png)